Introduction

My e‑portfolio tells the story of my journey through my latest module, Machine Learning. I break down every unit with Gibbs’ Reflective Cycle (The University of Edinburgh, 2024), and I finish with a big‑picture reflection that also borrows the “What? So what? Now what?” steps from Rolfe et al. (2001). Walland & Shaw (2020) argue that an e‑portfolio is both process and product, so you’ll see my messy thinking as well as my polished results.

Reflection Approach: Gibbs’ Reflective Cycle

Why Gibbs’ Reflective Cycle? Because it is well rounded and has already carried me successfully through several modules. The cycle involves six key steps: description, feelings, evaluation, analysis, conclusion and action plan.

Gibbs’ Reflective Cycle keeps me honest: for each unit I describe, reflect on my feelings, evaluate, analyse, conclude and plan my next actions, with no corners cut.

Reflection on Each Unit

Unit 1 – Introduction to Machine Learning

Unit 2 – Exploratory Data Analysis

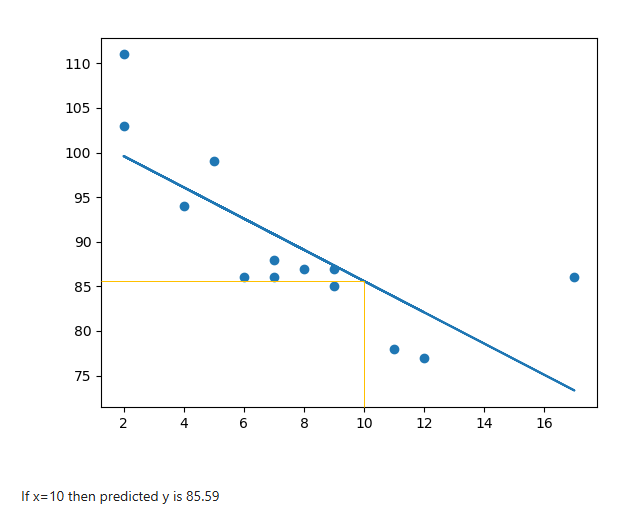

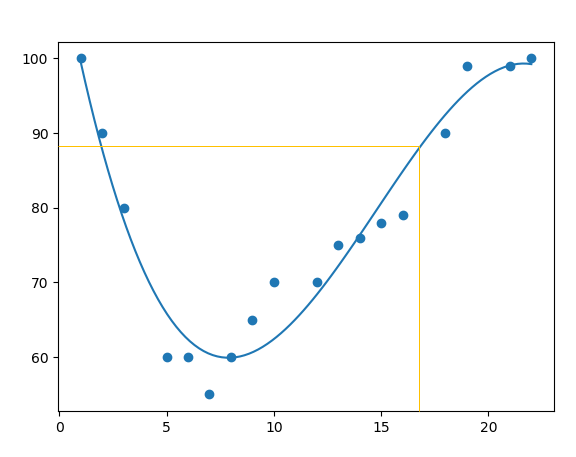

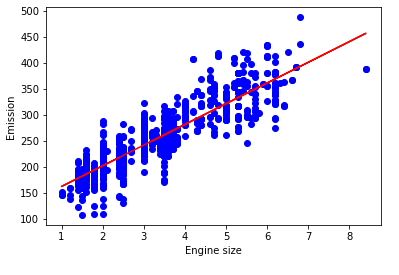

Unit 3 – Correlation & Regression

Unit 4 – Linear Regression with Scikit‑Learn

Unit 5 – Clustering

Unit 6 – Clustering with Python (Team Project)

Unit 7 – Intro to Artificial Neural Networks (ANN)

Unit 8 – Training an ANN

Unit 9 – Intro to CNNs

Unit 10 – Natural Language Processing

Unit 11 – Model Selection & Evaluation (Presentation)

Unit 12 – Industry 4.0 & ML

Reflection Piece

What? Summary of journey

Twelve units later, I have taken another step forward in my algorithm skills and, just as importantly, I have seen where I still need to learn. The module felt familiar, as I have several years of experience in machine learning, but it also brought new ideas to the table that I have not explored deeply enough during my career.

I am proud of what I delivered, from investigating and reporting on a famous IT outage to building my first practical CNN project for a company I work for. I have learnt a lot in this module: each unit gave me insights into neural networks that I had yet to discover. That said, I still need to dive deeper into large language models (LLMs) and transformers myself. I am more confident with machine learning than before, and I now understand the importance of taking ethics and communication into account.

As Walland & Shaw (2020) stress, an e‑portfolio is as much about the process behind the artefacts as the artefacts themselves. So the real story sits in the invisible layers: late‑night debugging, bad ping, “A‑ha!” moments when domain context finally clicks, and the performance anxiety of live presentations.

So what? Digging into growth, pain points, and patterns

| Theme | Wins | Learning Opportunity | Evidence / Trigger |
|---|---|---|---|
| Technical depth | CNN accuracy > 80%; wrote clean, modular code with no issues throughout the module | Limited exposure to LLMs and transformers | Unit 9 notebook and Unit 11 project show programming skill |
| Ethical & resilience lens | Adopted a “red‑team” mindset; added fall‑back design to dashboards | Missed the Unit 8 collaboration discussion | Unit 11 project shows growth in my ethical lens and red‑team mindset |
| Communication / storytelling | Reports clearer than in the last module | Saw how far I still have to go when presenting the slideshow to executives unfamiliar with machine learning | Feedback from the Unit 1 collaboration discussion; team feedback on the Unit 6 project when handing over ideas and the notebook |
| Collaboration | Smooth and enjoyable pairing with the team during the Unit 6 project | After completing the coding side, I had the voluntary option to help with the reporting side of the project, but work commitments left me without the time | Team feedback; we all now follow each other on LinkedIn |
| Time management | The team and I finished the Unit 6 project by the end of Unit 5, well ahead of schedule | A work crisis pushed university into the background for a few weeks, so I had to work late nights to catch up | Unit 6 project handed in several days early by the team |
| Reflection quality | Used Gibbs (The University of Edinburgh, 2024) consistently; action plans more in depth | Storytelling still needs work | E‑portfolio website for the latest Machine Learning module |

Key Insights

1. My default mental model is “build first, narrate later”. I will work on understanding the domain context first.

2. Ethics and resilience must be taken into account. It is important that I assess the likelihood of my project failing and who that failure may affect.

3. Live presentations are my new bottleneck. My storytelling still needs work, but live presenting is what makes me most nervous.

Now What? Concrete roadmap

| Goal | Method / Tool | Success Metric | Due Date |
|---|---|---|---|
| LLM and transformer experience | Build a simple LLM for my company using fake data | Colleagues can chat to it | 2025-08-31 |
| Improve ethics & resilience | Implement ethical and resilience checks for all my team’s projects | Team adopts the same checks; discussed at next month’s meeting | 2025-08-04 |
| Presentation practice | Reduce performance anxiety and improve presentation skills | Present to the team with my camera on during meetings | 2025-08-04 |
| Wordiness trim | Improve storytelling and reporting | Colleagues review my work projects and my partner reviews my personal/university projects; the only way to improve is to be open to criticism | 2025-08-31 |
| Time management | Improve planning and allow for setbacks at work | Get ahead of the next module and set university time aside regardless of work commitments | 2025-09-30 |

Professional Skills Matrix & Evidence of Development

| Skill / Competency | How I Developed It in the ML Module | Concrete Evidence / Artefact |
|---|---|---|
| Machine‑Learning Modelling | Implemented regression, clustering, ANN and CNN models; tuned hyper‑parameters and compared models | Unit 6 and Unit 11 projects |
| Resilience & Ethics | My EDA now includes resilience and ethics checks | Unit 6 and Unit 11 projects |
| Data Visualisation & Storytelling | Created exec‑friendly slides; compared models visually; delivered a 20‑min talk to non‑technical execs; took part in peer‑feedback dialogues | Unit 1 collaboration discussion; Unit 6 and Unit 11 projects |
| Collaboration & Leadership | Led a workable prototype in the Unit 6 project, working alongside a team of fellow experts | Unit 6 project |

Conclusion

I started the module feeling like “This is my domain, machine learning is my day job.” Halfway in, I realised I had not been emphasising resilience and ethics. By Unit 11, before presenting to execs, I felt the weight of translation: code alone won’t move the needle; stories do.

Am I satisfied? Mostly. My technical bar rose, my communication bar nudged upward, and my ethics bar has begun to grow. But I still have a long way to go. Reflections are only as good as the actions that follow them, so I have added every action to my to‑do list and I will complete them.

References

The University of Edinburgh (2024) Gibbs' Reflective Cycle. Reflection Toolkit. Available from: https://reflection.ed.ac.uk/reflectors-toolkit/reflecting-on-experience/gibbs-reflective-cycle [Accessed 10 April 2025].

Rolfe, G., Freshwater, D. & Jasper, M. (2001) Critical Reflection for Nursing and the Helping Professions: A User's Guide. Available from: Internet Archive [Accessed 10 April 2025].

Walland, E. & Shaw, S. (2020) E-portfolios in teaching, learning and assessment: tensions in theory and praxis. Technology, Pedagogy and Education. Available from: https://www.tandfonline.com/doi/full/10.1080/1475939X.2022.2074087#abstract [Accessed 10 April 2025].